Introduction: The Robot in the Room

With each viral video of a new humanoid robot from companies like Boston Dynamics, public excitement grows. We see machines that run, jump, and handle objects with increasing grace, and it’s easy to assume that a future of robotic assistance is just a few manufacturing cycles away. The hardware, it seems, has arrived.

But this perception misses the bigger picture. The biggest hurdles, and the most surprising truths, about our robotic future lie not in the mechanics of the body but in the complex software, the scarce training data, and the ethical safeguards needed to truly bring these machines to life. The real challenge has shifted from building the robot to building its mind.

We’ve Built the Perfect Body, But We’re Still Searching for a Brain

For decades, the primary challenge in robotics was a mechanical one: building a machine that could navigate the physical world with the agility and balance of a human. The hardware remains an impressive feat of engineering, but it is no longer the main bottleneck. The frontier has moved from the workshop to the data center. “Boston Dynamics also admitted in their recent video that they spent 30 years making the perfect body, while hoping someone else would eventually make a brain to go with it.”

Much of the field’s current focus is on creating the “brain” – the AI and control systems that will animate these advanced bodies. This is not a simple programming task; it’s a profound learning problem. The FLAM paper, for instance, builds a “stabilizing reward function” to teach a humanoid robot to keep a stable posture while it walks and manipulates objects. Even staying balanced is a skill that must be learned through interaction, not simply engineered with gears and motors.
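Purely to illustrate the idea, here is a minimal, hypothetical sketch in Python of what a posture-stability reward could look like. It is not the reward function from the FLAM paper: the state fields (`torso_pitch`, `torso_roll`, `angular_speed`, `com_offset`) and the weights are illustrative assumptions. The robot simply scores higher the closer it is to upright, still, and centered over its feet.

```python
import math
from dataclasses import dataclass


@dataclass
class PostureState:
    """Hypothetical snapshot of a robot's body state (illustrative fields only)."""
    torso_pitch: float    # forward/backward tilt, radians
    torso_roll: float     # sideways tilt, radians
    angular_speed: float  # how fast the torso is rotating, rad/s
    com_offset: float     # horizontal distance (m) of the center of mass from the middle of the feet


def stability_reward(s: PostureState,
                     w_tilt: float = 1.0,
                     w_spin: float = 0.1,
                     w_com: float = 2.0) -> float:
    """Return a score in (0, 1]; 1.0 means upright, motionless, and centered.

    Each term is a squared penalty that grows as the posture becomes less
    stable. exp(-penalty) maps the total into (0, 1], so the learner is
    always chasing a bounded "closer to 1 is better" signal.
    The weights are assumptions chosen for illustration.
    """
    tilt = s.torso_pitch ** 2 + s.torso_roll ** 2
    spin = s.angular_speed ** 2
    com = s.com_offset ** 2
    penalty = w_tilt * tilt + w_spin * spin + w_com * com
    return math.exp(-penalty)


if __name__ == "__main__":
    upright = PostureState(torso_pitch=0.0, torso_roll=0.0, angular_speed=0.0, com_offset=0.0)
    wobbling = PostureState(torso_pitch=0.3, torso_roll=0.1, angular_speed=0.5, com_offset=0.08)
    print(stability_reward(upright))   # 1.0
    print(stability_reward(wobbling))  # lower, because the robot is tilted and moving
```

A learning algorithm never sees these formulas as instructions; it only receives the score after each moment of movement and gradually favors whatever behavior keeps that score high.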

The AI Revolution is Fueled by Data, and Robots are Starving

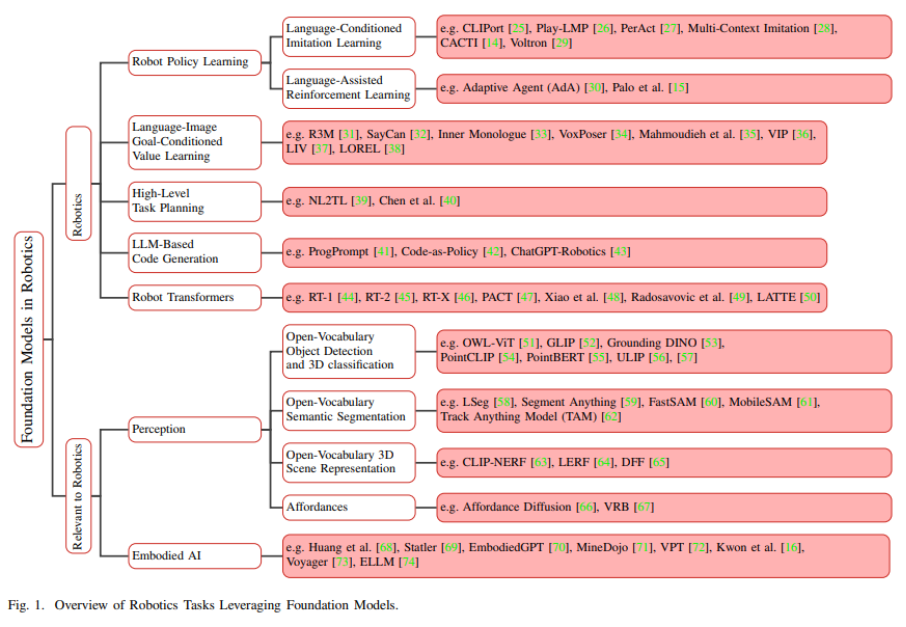

Today’s most powerful AI systems, such as the large language models (LLMs) behind chatbots, belong to a class known as “foundation models.” They achieve their remarkable capabilities by training on vast, internet-scale datasets containing trillions of words and billions of images. This abundance of data is the fuel for the AI revolution.

Robotics, however, is running on empty. A comprehensive academic survey on foundation models in robotics makes it clear: robot-specific training data is incredibly scarce. This is a counter-intuitive but critical problem. You can’t just scrape the web for billions of examples of a robot successfully loading a dishwasher, navigating a cluttered hallway, or folding laundry. This lack of relevant, real-world interaction data is a fundamental barrier to creating a general-purpose “GPT for Robotics” and remains one of the field’s most significant open challenges.

We’re Not Programming Robots – We’re Teaching Them Like Toddlers

The classic sci-fi image of a programmer typing lines of code to command a robot – walk forward, pick up object – is fading. Modern robotics is increasingly about learning rather than explicit programming.

Researchers are teaching robots in a way that is conceptually similar to how a toddler learns to balance and walk: through trial, error, and feedback. The FLAM research paper exemplifies this shift. It uses a reward system that encourages the robot to discover and learn stable postures on its own. This is part of a broader move, noted in academic surveys of the field, toward techniques like “reinforcement learning” (learning from interactions with the environment) and “imitation learning” (learning from human demonstrations). Instead of writing step-by-step instructions, developers supply rewards or demonstrations and create the conditions for the robot to learn the behavior itself.
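A toy sketch can make “trial, error, and feedback” concrete. The Python below is a hypothetical example, not the FLAM method and not full reinforcement learning: it uses random-search hill climbing, one of the simplest trial-and-error schemes, to tune a single balancing parameter (`gain`) for a made-up one-dimensional “lean angle” model. Nothing tells the learner what the right gain is; it only sees how much reward each attempt earns.

```python
import random


def run_episode(gain: float, steps: int = 200, dt: float = 0.02) -> float:
    """Toy balance episode: return the total reward earned with a given gain.

    The "body" is a hypothetical lean angle that tips further on its own
    (a crude stand-in for gravity), while a corrective push proportional
    to the lean, scaled by `gain`, pulls it back toward upright.
    """
    angle, velocity = 0.1, 0.0  # start slightly tipped over (radians)
    total = 0.0
    for _ in range(steps):
        push = gain * angle                          # corrective action
        accel = 9.8 * angle - push - 2.0 * velocity  # tipping, correction, damping
        velocity += accel * dt
        angle += velocity * dt
        # Reward: stay near upright, with a small cost for pushing hard.
        total -= angle ** 2 + 1e-4 * push ** 2
    return total


def learn_gain(trials: int = 300, seed: int = 0) -> tuple[float, float, float]:
    """Trial and error via random-search hill climbing.

    Try a small random change to the behavior, keep it only if the
    episode earns more reward, and repeat.
    Returns (learned gain, reward before learning, reward after learning).
    """
    rng = random.Random(seed)
    gain = 0.0                                  # start with no balancing reflex at all
    best = initial = run_episode(gain)
    for _ in range(trials):
        candidate = gain + rng.gauss(0.0, 2.0)  # trial: perturb the behavior
        score = run_episode(candidate)          # feedback: measure the reward
        if score > best:                        # keep only what worked better
            gain, best = candidate, score
    return gain, initial, best


if __name__ == "__main__":
    gain, before, after = learn_gain()
    print(f"learned gain {gain:.1f}, reward {before:.2f} -> {after:.2f}")
```

The small cost on `push` is a deliberate design choice: without it, the highest reward would come from pushing back ever harder, which a real motor with finite torque could not do. Real systems replace the single number with a neural network policy and the toy physics with a simulator or the physical robot, but the loop of try, measure, and keep what works is the same in spirit.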

Your Messy Living Room is a Bigger Challenge Than a High-Tech Factory

While we might imagine humanoid robots first appearing in our homes, domestic environments are likely to be their final frontier. A market forecast from Digitimes lays out a timeline for adoption based on environmental complexity:

- Short-term (3-5 years): Manufacturing

- Mid-term (5-10 years): Service

- Long-term (10+ years): Household

The reason for this progression is simple: predictability. Manufacturing environments are described as “enclosed and characterized by predictable, standardized procedures.” A factory is a controlled world where a robot can perform repetitive tasks with minimal surprises.

In contrast, your home is an environment of near-infinite variability: unpredictable, complex, and unique. Operating successfully in a household requires “advanced autonomous planning and exceptional hand dexterity” to manage a constantly changing landscape of objects, people, and pets. That makes your living room one of the most demanding environments a robot will ever have to master.

The Race for ‘Trustworthy AI’ is as Important as the Race for Hardware

As humanoid robots become more capable, public concerns – sometimes jokingly referenced in online forums with terms like “Skynet” – highlight a crucial parallel field of development: AI ethics. Building a safe and beneficial robotic future isn’t just about technical prowess; it’s about establishing trust.

The High-Level Expert Group on Artificial Intelligence, set up by the European Commission, formalized this goal in its Ethics Guidelines for Trustworthy AI, which define “Trustworthy AI” through three core components: an AI system must be lawful, ethical, and robust. To make this concept concrete, the guidelines outline seven key requirements that AI systems should meet. These include:

- Human agency and oversight: Ensuring that humans can oversee and intervene when necessary.

- Accountability: Putting clear mechanisms in place to ensure responsibility for the AI system and its outcomes.

- Transparency: Making the system’s operations understandable and traceable.

These are not abstract ideals; they are practical necessities for a machine operating in the chaos of a family kitchen. How does “accountability” work when a robot breaks a family heirloom? What does “transparency” mean when it makes a decision the homeowner doesn’t understand? Ethical frameworks like these are being developed in parallel with the technology, not as an afterthought. The guidelines explicitly raise concerns about potential misuses of AI, including “citizen scoring” and “lethal autonomous weapon systems.” The race to build safe, ethical AI is therefore just as critical as the race to build better hardware.

Conclusion: More Than Just Nuts and Bolts

The journey toward a future with humanoid robots has evolved far beyond a simple hardware challenge. The gleaming metal bodies are here, but the real work lies ahead. We are now facing a profound software, data, and ethical puzzle that will define the next chapter of artificial intelligence. The key breakthroughs will not be in stronger joints or faster actuators, but in smarter algorithms, better data, and a deeper commitment to responsible innovation.

As we solve the immense technical challenges of building a robot’s mind, are we prepared to answer the even harder questions about its purpose and principles?

Further reading

- Boston Dynamics & Google DeepMind Form New AI Partnership to Bring Foundational Intelligence to Humanoid Robots: https://bostondynamics.com/blog/boston-dynamics-google-deepmind-form-new-ai-partnership

- Ethics Guidelines for Trustworthy AI (High-Level Expert Group on Artificial Intelligence, set up by the European Commission): https://www.europarl.europa.eu/cmsdata/196377/AI%20HLEG_Ethics%20Guidelines%20for%20Trustworthy%20AI.pdf

- Foundation Models in Robotics: Applications, Challenges, and the Future: https://arxiv.org/pdf/2312.07843

- FLAM: Foundation Model-Based Body Stabilization for Humanoid Locomotion and Manipulation: https://arxiv.org/html/2503.22249v1