Vibe Working: Microsoft’s New AI Agents Automate Complex Tasks. But Should You Trust Them Unsupervised?

06/11/2025

The Core Shift: Defining “Vibe Working” and Agentic Productivity

Microsoft’s introduction of vibe working represents a fundamental evolution in human-AI collaboration. At its core, vibe working is a collaborative, steerable workflow where users articulate high-level outcomes in natural language, and AI agents plan and execute the multi-step operations needed to achieve them. This paradigm mirrors trends seen in “vibe coding” (see our article on vibe coding: https://novelis.io/research-lab/software-3-0-how-large-language-models-are-reshaping-programming-and-applications/), where developers shift from crafting individual code statements to orchestrating higher-order programmatic goals. The result is a user experience that emphasizes iterative orchestration rather than isolated, single-step interactions.

The transition from suggestion-oriented AI to agentic AI underpins this paradigm shift. Microsoft’s new agentic capabilities extend Copilot beyond single-turn chat interactions into a platform that can decompose requests into discrete, testable actions. These agents operate across documents and services, sequencing complex tasks and integrating multiple tools. Unlike earlier Copilot iterations, which primarily offered advice or code snippets, agentic AI executes, verifies, and iterates autonomously under human supervision, effectively bridging the gap between guidance and operational action.

Agent Mode: Orchestration Inside the Office Canvas (Excel and Word)

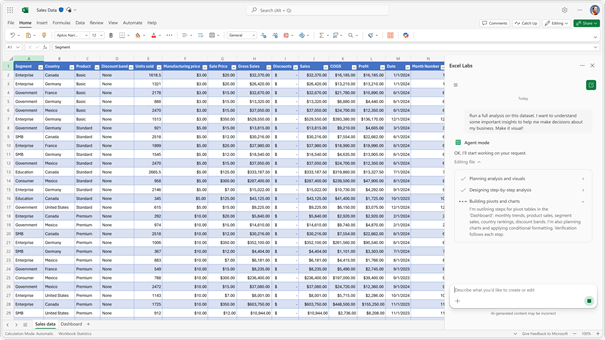

Agent Mode, initially available in the web versions of Excel and Word, shows how AI can work directly inside Microsoft Office. It allows non-expert users to leverage advanced functionality that was previously the domain of power users.

In Excel, users can “speak Excel” in natural language. The agent can generate financial reports, build loan calculators with amortization schedules, analyze datasets, construct PivotTables, generate charts, and apply formulas automatically.

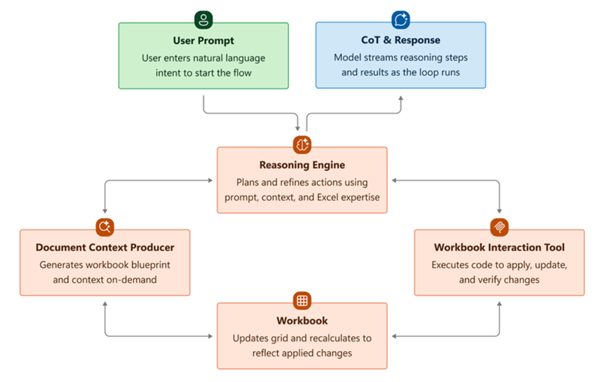

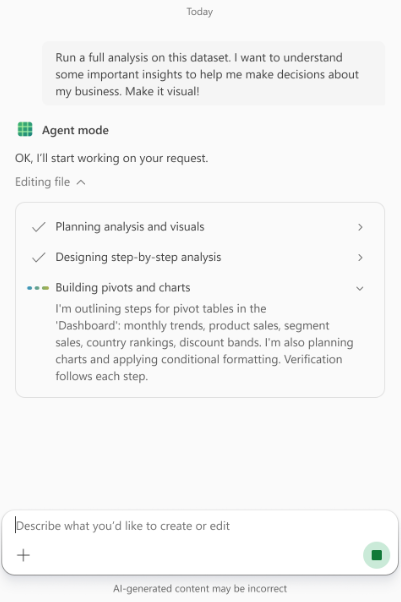

Here’s how it works step by step: users describe a task in natural language; the agent selectively gathers relevant workbook context (tables, formulas, charts, and metadata); it plans a solution using its reasoning engine; executes the plan directly in Excel via APIs; reflects and iterates on results to ensure correctness; validates intermediate outputs for accuracy; and finally delivers a reliable, verifiable workbook. Essentially, it plans, executes, checks, and iterates, acting as an intelligent collaborator rather than just a formula generator.
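The plan, execute, check, and iterate cycle described above can be sketched in a few lines of Python. This is an illustrative toy, not Microsoft's implementation: the `Step` class, the `run_agent` function, the dictionary standing in for a workbook, and the retry limit are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One planned action plus the check that validates its result."""
    name: str
    run: Callable[[dict], None]    # applies the change to the workbook stand-in
    check: Callable[[dict], bool]  # True when the intermediate output looks right


def run_agent(workbook: dict, plan: list[Step], max_attempts: int = 3) -> list[str]:
    """Execute each step, validate it, and retry until it passes or attempts run out."""
    log: list[str] = []
    for step in plan:
        for attempt in range(1, max_attempts + 1):
            step.run(workbook)
            if step.check(workbook):
                log.append(f"{step.name}: ok (attempt {attempt})")
                break
            log.append(f"{step.name}: failed check (attempt {attempt})")
        else:
            # No attempt passed: stop and hand the workbook back to the user.
            log.append(f"{step.name}: needs human review")
            break
    return log


# Hypothetical plan for "total the payments, then average them".
workbook = {"payments": [100.0, 200.0, 300.0]}
plan = [
    Step("sum payments",
         run=lambda wb: wb.update(total=sum(wb["payments"])),
         check=lambda wb: wb["total"] == 600.0),
    Step("average payment",
         run=lambda wb: wb.update(average=wb["total"] / len(wb["payments"])),
         check=lambda wb: wb["average"] == 200.0),
]
for line in run_agent(workbook, plan):
    print(line)
```

The point of the sketch is the control flow: every step carries its own validation, and a step that never passes its check halts the run and escalates to a person instead of silently continuing.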

In Word, Agent Mode supports “vibe writing,” where the AI drafts sections, applies styles, refines tone, and incorporates data from referenced files or emails. Users can track reasoning in the task pane, follow live changes, and intervene at any point. Steps can be paused, reordered, modified, or aborted, keeping the workflow auditable and fully steerable.

Across both apps, Agent Mode turns complex, multi-step workflows into manageable, transparent, and user-driven processes.

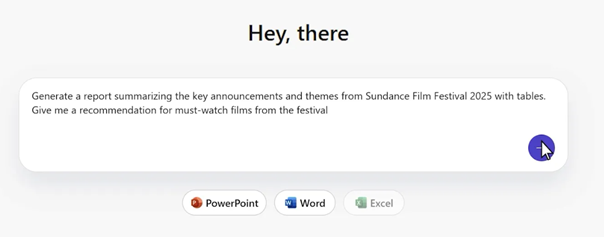

Office Agent: The Chat-First, Multi-Model Drafting Powerhouse

The Office Agent extends agentic productivity into the chat-first interface of Copilot. Specializing in generating PowerPoint presentations and Word documents from conversational prompts, it produces outputs described as polished and professional, often achieving “first-year consultant” quality within minutes. The workflow begins by clarifying user intent, followed by optional web-grounded research, culminating in structured, visually coherent deliverables. For example, prompts can instruct the agent to draft multi-slide executive briefs or customer-ready Word reports outlining project value propositions.

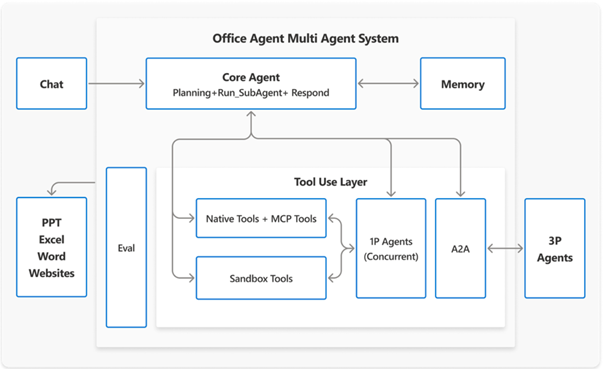

Crucially, Microsoft employs a multi-model strategy to optimize task performance. While OpenAI models power deep, in-app Agent Mode operations, Anthropic’s Claude models are explicitly routed for chat-first, research-heavy generation tasks. This model routing aligns with the principle of using the right model for the right job, balancing responsiveness, knowledge grounding, and stylistic quality.

At its core, the Office Agent is built on a multi-agent orchestration engine where a central planner coordinates tasks and synthesizes results, specialized agents handle domain-specific subtasks like coding, finance, or search in parallel, and a secure tool layer ensures all actions run safely within controlled, sandboxed environments.
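As a rough illustration of the planner-plus-specialists pattern, the Python sketch below has a central function split a request into subtasks, run hypothetical specialist agents in parallel, and join their results. The agent functions, the `plan_subtasks` stub, and the `SPECIALISTS` registry are invented for this example; the real planner would rely on a model to decompose the request, and the sandboxed tool layer is not modeled here.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical specialist agents: each takes a subtask and returns a text result.
def finance_agent(task: str) -> str:
    return f"[finance] {task}"


def search_agent(task: str) -> str:
    return f"[search] {task}"


def coding_agent(task: str) -> str:
    return f"[coding] {task}"


SPECIALISTS = {"finance": finance_agent, "search": search_agent, "coding": coding_agent}


def plan_subtasks(request: str) -> list[tuple[str, str]]:
    """Stand-in planner: returns (domain, subtask) pairs for a request."""
    return [
        ("search", f"background for: {request}"),
        ("finance", f"figures for: {request}"),
    ]


def orchestrate(request: str) -> str:
    """Dispatch subtasks to specialists in parallel, then synthesize the results."""
    subtasks = plan_subtasks(request)
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(SPECIALISTS[domain], task) for domain, task in subtasks]
        results = [future.result() for future in futures]  # kept in plan order
    return "\n".join(results)


print(orchestrate("Q3 executive brief"))
```

Collecting results in the order the planner produced them, rather than in completion order, keeps the synthesized output deterministic even though the specialists run concurrently.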

The Reality Check: Benefits, Risks, and Governance

The productivity benefits of agentic AI are tangible. Agent Mode dramatically lowers the skill barrier for complex Excel and Word tasks, and work that previously required hours of manual effort can now be completed in minutes. For enterprises, this shift has organizational implications: AI can free employees from repetitive work so they can focus on high-value activities, potentially reshaping roles and workflows.

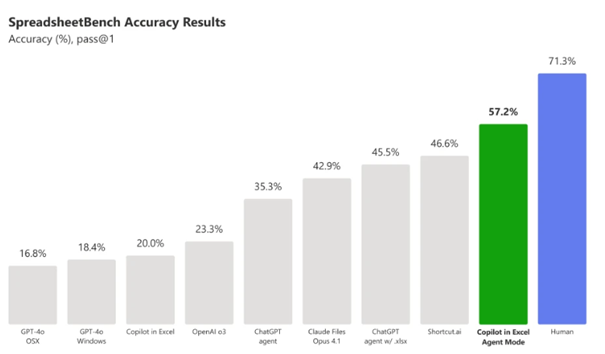

However, the accuracy gap remains a critical concern. On the SpreadsheetBench benchmark, Agent Mode in Excel achieved a 57.2% accuracy rate, exceeding competitors but still falling short of human experts at 71.3%. Consequently, outputs must be treated as drafts subject to human verification. The rapid generation capabilities also introduce the risk of “workslop”, where AI-produced artifacts appear complete but lack substantive rigor, creating friction for colleagues who must correct or augment them.
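To put the benchmark figures above in concrete terms, the short Python calculation below derives the gap in percentage points and a rough count of outputs that would need correction in a batch. The batch size of 100 is an arbitrary assumption, and benchmark accuracy will not necessarily carry over to any particular real-world workload.

```python
AGENT_ACCURACY = 0.572  # Agent Mode in Excel on SpreadsheetBench, as cited above
HUMAN_ACCURACY = 0.713  # human experts on the same benchmark, as cited above

# Gap in percentage points, and agent accuracy relative to the human baseline.
gap_points = round((HUMAN_ACCURACY - AGENT_ACCURACY) * 100, 1)
relative_to_human = round(AGENT_ACCURACY / HUMAN_ACCURACY, 3)


def expected_to_fix(n_tasks: int, accuracy: float) -> int:
    """Expected number of incorrect outputs in a batch, rounded to whole tasks."""
    return round(n_tasks * (1 - accuracy))


print(f"gap: {gap_points} points, agent/human ratio: {relative_to_human}")
print(f"per 100 tasks, roughly {expected_to_fix(100, AGENT_ACCURACY)} need correction")
```

At benchmark accuracy, more than four in ten outputs would be wrong, which is why review of every result, not spot-checking, is the safer default.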

Governance is paramount, particularly given the multi-model architecture. Routing workloads to Anthropic models may involve infrastructure outside Azure, such as AWS, raising data residency and contractual considerations. Tenant administrators must explicitly authorize Anthropic use, evaluate contractual obligations, and implement controls through the Microsoft 365 Admin Center and Copilot Control System. Mitigations include requiring human sign-off for sensitive documents, restricting Anthropic model usage to low-risk scenarios, and maintaining detailed logs of agent plans and intermediate artifacts for auditability and incident investigation.
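The mitigations above amount to a policy check that runs before any workload is routed. The Python sketch below shows one way such a gate could look. It does not use or represent any Microsoft 365 admin API: the `TenantPolicy` fields, the sensitivity labels, and the `decide` function are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical sensitivity labels, ordered from least to most sensitive.
SENSITIVITY_ORDER = ["public", "internal", "confidential"]


@dataclass(frozen=True)
class TenantPolicy:
    anthropic_enabled: bool             # the admin has explicitly opted in
    anthropic_max_sensitivity: str      # highest label allowed for that provider
    signoff_from: str = "confidential"  # labels at or above this need human sign-off


@dataclass(frozen=True)
class Decision:
    provider: str              # "anthropic" or "default"
    needs_human_signoff: bool
    reason: str


def decide(policy: TenantPolicy, sensitivity: str, wants_anthropic: bool) -> Decision:
    """Pick a provider and flag whether a human must approve the output."""
    rank = SENSITIVITY_ORDER.index(sensitivity)
    needs_signoff = rank >= SENSITIVITY_ORDER.index(policy.signoff_from)
    if not wants_anthropic:
        return Decision("default", needs_signoff, "task does not request Anthropic routing")
    if not policy.anthropic_enabled:
        return Decision("default", needs_signoff, "tenant has not authorized Anthropic models")
    if rank > SENSITIVITY_ORDER.index(policy.anthropic_max_sensitivity):
        return Decision("default", needs_signoff, "document too sensitive for Anthropic routing")
    return Decision("anthropic", needs_signoff, "authorized and within sensitivity limit")


# Keep every decision so routing can be audited later.
audit_log: list[Decision] = []
policy = TenantPolicy(anthropic_enabled=True, anthropic_max_sensitivity="internal")
for label in SENSITIVITY_ORDER:
    decision = decide(policy, label, wants_anthropic=True)
    audit_log.append(decision)
    print(label, "->", decision.provider, "| sign-off:", decision.needs_human_signoff)
```

Returning a reason with each decision, and appending every decision to a log, mirrors the auditability requirement: an investigator can later see not only where a workload went but why.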

Real-World Lessons from Klarna’s AI Experiment

Klarna’s experience underscores the importance of balancing AI automation with human oversight. In 2022, the Swedish fintech company laid off approximately 700 employees, and in early 2024 it rolled out an AI assistant powered by OpenAI. In its first month, the assistant handled two-thirds of customer service chats, work the company described as equivalent to that of 700 full-time agents. However, by 2025, Klarna faced increased customer complaints and declining satisfaction scores. CEO Sebastian Siemiatkowski admitted that the company had focused too much on efficiency and cost, leading to lower quality and unsustainable operations. In response, Klarna began rehiring human agents to restore service quality, emphasizing the need for a balance between AI efficiency and human empathy in customer interactions.

Conclusion: Collaborative, Auditable, but Human-Led

Microsoft’s Agent Mode and Office Agent collectively signify a meaningful step toward agentic productivity in Microsoft 365. They deliver measurable speed and accessibility gains, transforming how users interact with complex office workflows. Yet, realizing the potential of vibe working requires a disciplined approach: AI agents must be treated as drafting and scaffolding tools, all high-stakes outputs should undergo human verification, and multi-model routing demands robust governance. The future of work in this paradigm is inherently collaborative rather than fully autonomous, with humans remaining the ultimate arbiters of accuracy, trust, and organizational value.

Further Reading

- Vibe working: Introducing Agent Mode and Office Agent in Microsoft 365 Copilot: https://www.microsoft.com/en-us/microsoft-365/blog/2025/09/29/vibe-working-introducing-agent-mode-and-office-agent-in-microsoft-365-copilot/

- Building Agent Mode in Excel: https://techcommunity.microsoft.com/blog/excelblog/building-agent-mode-in-excel/4457320

- Office Agent – “Taste driven” multi-agent system for Microsoft 365 Copilot: https://techcommunity.microsoft.com/blog/microsoft365copilotblog/office-agent-%E2%80%93-%E2%80%9Ctaste-driven%E2%80%9D-multi-agent-system-for-microsoft-365-copilot/4457397

- Andrej Karpathy: Software Is Changing (Again): https://www.youtube.com/watch?v=LCEmiRjPEtQ

- Company Regrets Replacing All Those Pesky Human Workers With AI, Just Wants Its Humans Back: https://futurism.com/klarna-openai-humans-ai-back

- Workers are irritating colleagues with useless workslop: https://thehustle.co/news/workers-are-irritating-colleagues-with-useless-workslop