The rapid rise of large language models (LLMs) has brought the legal profession to a crucial inflection point. On one side, these tools promise to streamline complex research, speed up drafting, and even transform dense legislative language into structured, usable formats. On the other, their well-documented limitations – particularly around accuracy and reliability – make their role in such a high-stakes field highly contested. This tension between opportunity and risk now sits at the heart of the debate: are LLMs ready to serve as trusted collaborators in legal practice, or must they remain closely supervised instruments – useful, but never fully independent?

Two recent studies capture this debate well. “Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools” takes a critical look at proprietary AI research platforms, proposing systematic ways to evaluate their strengths and weaknesses. Meanwhile, “From Text to Structure: Using Large Language Models to Support the Development of Legal Expert Systems” explores a more constructive path, showing how LLMs might help convert legislative text into transparent, rule-based systems that enhance accessibility and precision. Together, these works underscore the dual nature of LLMs in law: at once a source of concern and a driver of innovation.

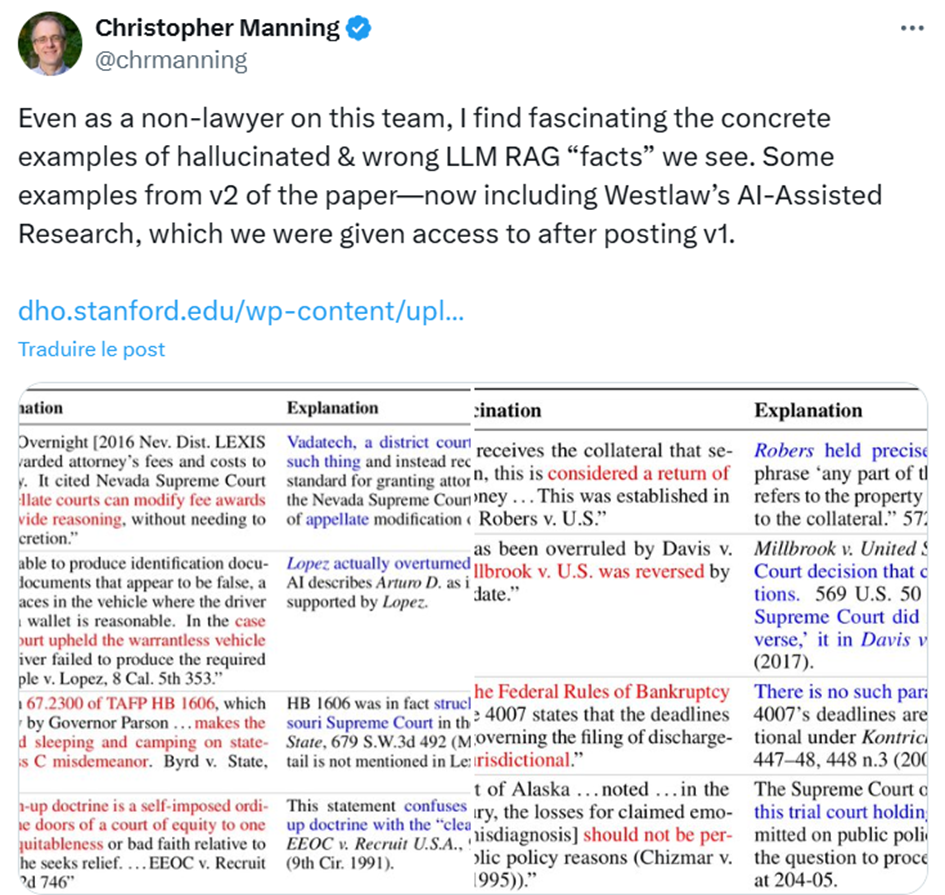

Link: https://x.com/chrmanning/status/1796593594654130230

The Promise: LLMs as Augmenting Legal Intelligence

An optimistic view sees these models as powerful enablers of “augmented intelligence.” This perspective highlights how LLMs can address a key bottleneck in building legal decision-support systems – tools that play a vital role in expanding access to justice.

Streamlining a Key Bottleneck

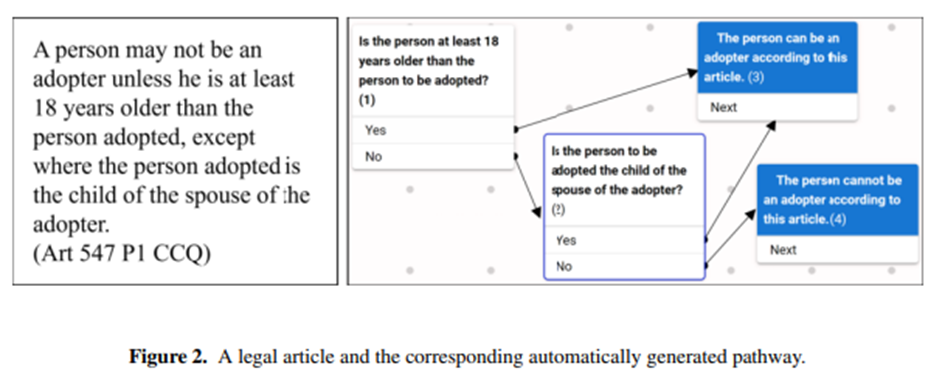

Traditionally, encoding complex legislative text into a structured, rule-based representation – referred to as “pathways” of criteria and conclusions – is a labor-intensive and costly task. Yet this structured data is the foundation for tools like JusticeBot, which help non-lawyers understand how laws apply to them. In the “From Text to Structure” study, researchers used GPT-4 to automate the extraction of pathways from 40 articles of the Civil Code of Quebec.
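To make the idea of a pathway concrete, here is a minimal Python sketch of how criteria and conclusions could be stored and traversed. It is an illustration only: the `Node` class, the `next_by_answer` field, the `walk` function, and the toy notice rule are all assumptions invented for this example. They are not taken from JusticeBot, from the study's schema, or from the Civil Code of Quebec.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One step in a pathway: a criterion (a question) or a conclusion."""
    node_id: str
    text: str
    kind: str  # "criterion" or "conclusion" (hypothetical labels)
    # For criteria only: maps an answer to the id of the next node.
    next_by_answer: dict = field(default_factory=dict)


def walk(nodes, start, answers):
    """Follow the given answers from the start node until a conclusion is reached."""
    current = nodes[start]
    while current.kind == "criterion":
        answer = answers[current.node_id]
        current = nodes[current.next_by_answer[answer]]
    return current.text


# Invented toy rule, used purely for illustration.
nodes = {
    "c1": Node("c1", "Was the notice given in writing?", "criterion",
               {"yes": "c2", "no": "r_invalid"}),
    "c2": Node("c2", "Was it sent at least 30 days in advance?", "criterion",
               {"yes": "r_valid", "no": "r_invalid"}),
    "r_valid": Node("r_valid", "The notice is valid.", "conclusion"),
    "r_invalid": Node("r_invalid", "The notice is not valid.", "conclusion"),
}

result = walk(nodes, "c1", {"c1": "yes", "c2": "no"})
print(result)
```

In a representation like this, the expensive part is not the traversal but writing the nodes by hand from the statute, which is the step the researchers tried to automate.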

Encouraging Results

The findings were highly promising, demonstrating the potential of LLMs to act as efficient drafters for legal experts:

- High accuracy and usefulness: GPT-4 achieved 92.5% textual accuracy. More importantly, 90% of the generated pathways were considered useful for decision-support tools – 40% were directly usable, while another 50% required only minor adjustments.

- Performance vs. human annotators: In blind comparisons, 60% of LLM-generated pathways were rated as equal to or better than those created manually. For simpler articles, this figure rose to 77.8%. Remarkably, some outputs even helped human experts detect logical errors in their own work.

- Low hallucination rate: In 87.5% of cases, GPT-4 faithfully adhered to the source text, avoiding the invention of criteria or conclusions.

This approach positions LLMs not as replacements but as collaborators – handling the tedious initial drafting so that legal experts can focus on verification and refinement. The vision is to scale the creation of legal decision-support systems and, in doing so, expand access to justice.

The Peril: The Reality of Persistent Hallucinations

In sharp contrast, the “Hallucination-Free?” study, which assesses the reliability of leading AI legal research tools, adopted a more cautious stance. It found that, despite bold claims from vendors, these tools continue to suffer from persistent and problematic hallucinations, placing a heavy burden of supervision on legal professionals.

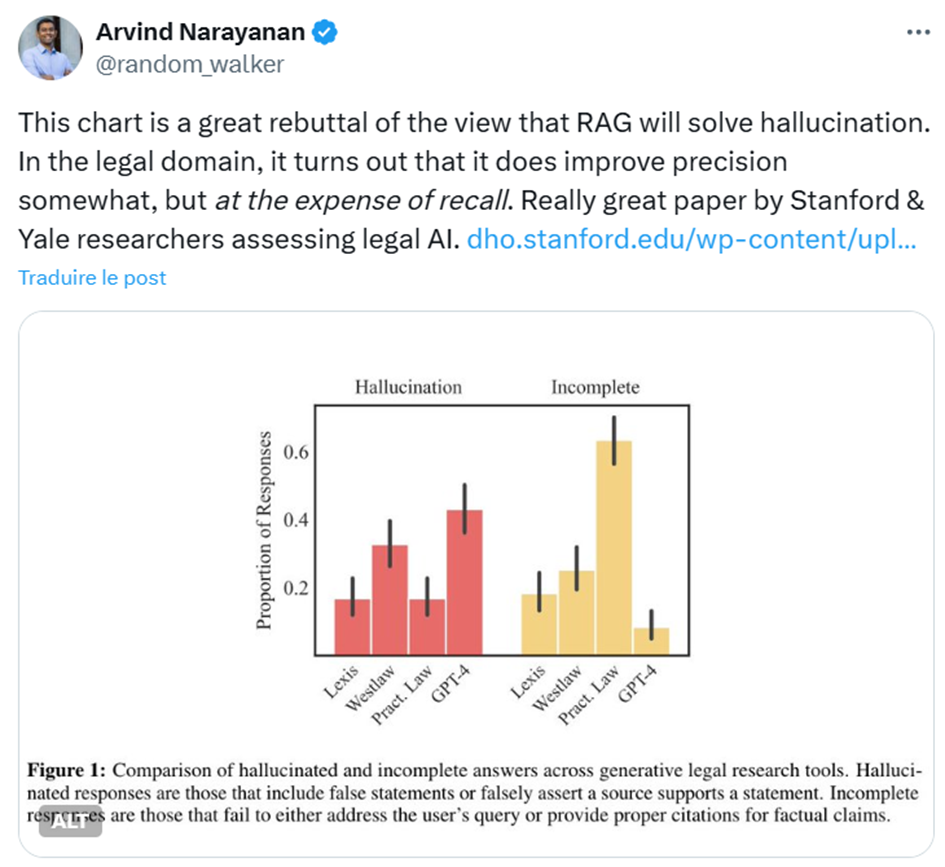

Link: https://x.com/random_walker/status/1796557544241901712

Overstated Vendor Claims and Persistent Hallucinations

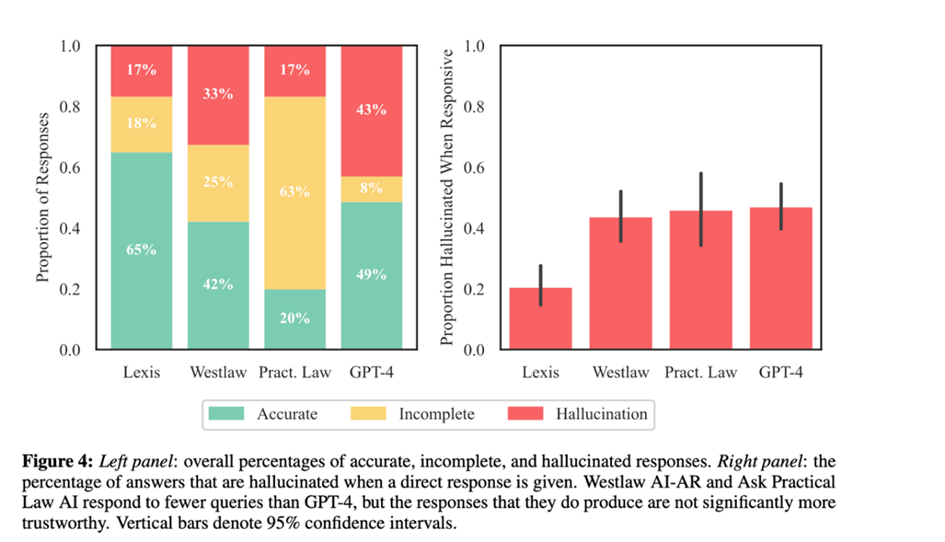

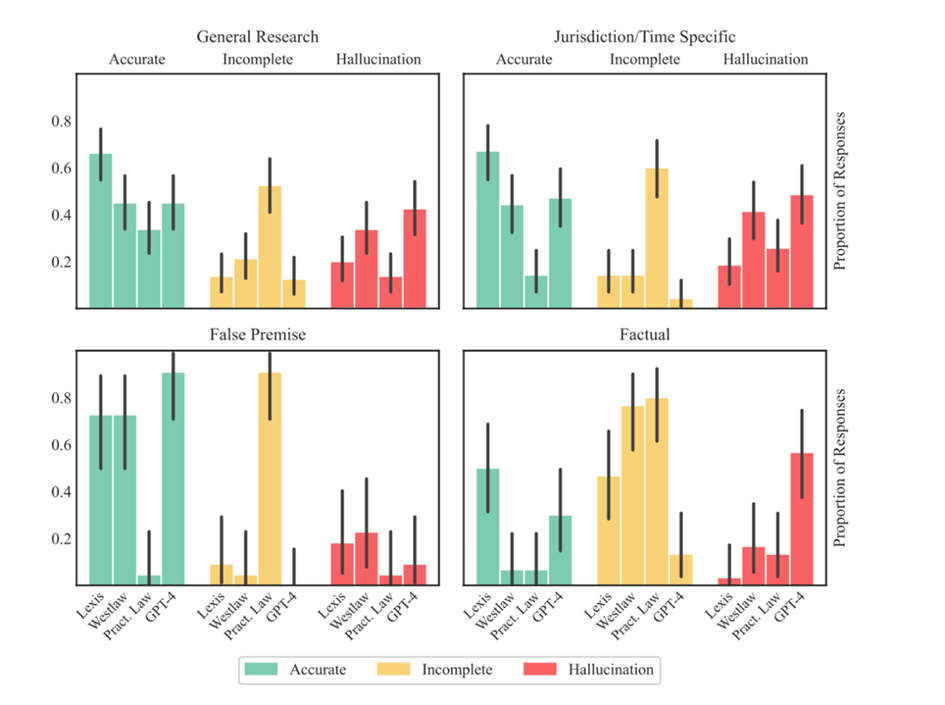

Legal technology providers such as LexisNexis and Thomson Reuters (Westlaw) have advertised their AI-powered research tools as “hallucination-free.”

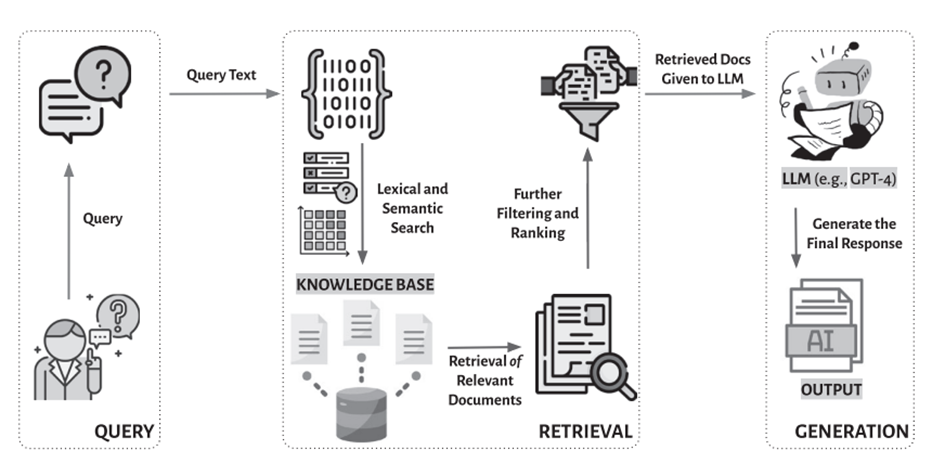

Yet the study challenged these claims, showing that even specialized Retrieval-Augmented Generation (RAG) systems still hallucinate at significant rates.

- Hallucination rates: Lexis+ AI, Westlaw AI-Assisted Research, and Ask Practical Law AI hallucinated between 17% and 33% of the time. While this is an improvement over general-purpose chatbots like GPT-4 (previously shown to hallucinate 58–82% of the time on legal queries), the rates remain troubling in a high-stakes domain.

- Insidious errors: The study expanded the definition of hallucination to include “misgrounded” responses – answers that cite real but irrelevant, overturned, or distorted sources. Such subtle errors are considered more dangerous than fabricating a case outright, precisely because they are harder to detect.
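The effect of the broader definition can be sketched in a few lines of Python. This is a rough illustration, not the study's evaluation code: the label names (`grounded`, `fabricated`, `misgrounded`), the `hallucination_rate` function, and the sample labels are hypothetical and chosen only to show how counting misgrounded answers changes the measured rate.

```python
from collections import Counter

# Hypothetical reviewer labels for a response:
#   "grounded"    - supported by relevant, valid sources
#   "fabricated"  - cites something that does not exist
#   "misgrounded" - cites a real source that is irrelevant, overturned, or distorted
BROAD_DEFINITION = {"fabricated", "misgrounded"}
NARROW_DEFINITION = {"fabricated"}


def hallucination_rate(labels, definition):
    """Share of responses whose label counts as a hallucination under `definition`."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    flagged = sum(counts[label] for label in definition)
    return flagged / len(labels)


# Made-up sample of six labelled responses (not data from the study).
sample = ["grounded", "misgrounded", "grounded", "fabricated", "grounded", "grounded"]

narrow_rate = hallucination_rate(sample, NARROW_DEFINITION)
broad_rate = hallucination_rate(sample, BROAD_DEFINITION)
print(f"narrow: {narrow_rate:.2f}, broad: {broad_rate:.2f}")
```

On this made-up sample the broad definition flags twice as many responses as the narrow one, which is why the choice of definition matters when comparing vendor claims with independent measurements.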

The Burden on Legal Professionals

These hallucinations create a profound ethical and practical dilemma for lawyers. Professional duties of competence and supervision obligate them to verify every AI-generated output manually.

- Undercutting efficiency: This constant verification undermines the very efficiency gains AI is supposed to provide. Instead of a streamlined workflow, lawyers face an endless cycle of vigilance and fact-checking.

- Risk of malpractice: Lawyers who skip this oversight risk breaching their professional duties and even facing malpractice claims, as seen in cases where attorneys cited fictional, AI-invented precedents.
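A back-of-the-envelope calculation shows why spot-checking is unlikely to be enough. The sketch below assumes, purely for illustration, that each query hallucinates independently at a fixed rate, which real tools and real research sessions will not satisfy exactly. The function name `prob_at_least_one` and the choice of ten queries are assumptions; the 17% and 33% rates are the bounds reported in the study.

```python
def prob_at_least_one(rate, n_queries):
    """Probability that at least one of n independent queries hallucinates."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be between 0 and 1")
    if n_queries < 0:
        raise ValueError("n_queries must be non-negative")
    # Complement of "every query is clean".
    return 1.0 - (1.0 - rate) ** n_queries


low = prob_at_least_one(0.17, 10)   # lower bound reported in the study
high = prob_at_least_one(0.33, 10)  # upper bound reported in the study
print(f"10 queries: {low:.1%} to {high:.1%} chance of at least one hallucination")
```

Under these simplifying assumptions, a session of ten queries has roughly an 84% to 98% chance of containing at least one hallucinated answer, so checking only a sample of outputs would leave most sessions exposed.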

At present, the legal field cannot escape the need for human oversight. LLMs can draft, summarize, and structure – but lawyers remain ultimately responsible for accuracy.

Conclusion: Two Sides of the Same Coin

These two papers outline a clear axis in the conversation around legal AI. The optimistic perspective envisions LLMs as collaborative tools that expand access to justice by democratizing legal information. The cautious view, however, grounds the debate in the everyday reality of commercial tools, where hallucinations – though reduced – remain a fundamental obstacle. In that setting, the human-in-the-loop role shifts from collaborative drafting to painstaking supervision.

For legal tech to fulfill its promise, the industry must:

- Invest in transparency: Vendors should publish accuracy benchmarks and openly acknowledge limitations.

- Refine safeguards: More reliable retrieval systems and hallucination filters are essential.

- Reframe roles: LLMs should be framed as support tools, not substitutes, with humans firmly in control.

- Educate lawyers: Training on AI’s capabilities and risks must become part of ongoing professional development.

The future of legal AI will depend on striking this balance. While LLMs show immense potential, the continuing need for human accountability – and the lack of transparency in commercial systems – means that for now, the dream of effortless efficiency remains unrealized.

How can the legal tech industry bridge this gap and build tools that are both reliable and trustworthy?